Paul Warren, research associate at the Public Policy Institute of California

Paul Warren, who was deputy for testing and accountability at the California Department of Education (CDE) from 1999 to 2003, has written a tough but fair critique of what’s being done with CAASPP results. The Public Policy Institute of California (PPIC) published it in the last week of June 2018. This is not an academic review. Paul knows how the CDE works. He knows about the delicate matter of political relations with the State Board and the Department of Finance. He was there when the CST was introduced and the API-centered accountability system was first built. He was among those leading the CALPADS construction project. This means he knows what quality is attainable in moments of big system change. His conclusion: the CDE and State Board could have done much better.

His readable 26-page report, “California’s K-12 Test Scores: What Can the Available Data Tell Us,” is written with a gentle hand. His language is moderate, but his findings are dramatic. Paul calls out a host of flaws and also points to a missed opportunity: why infer “growth” from a yearly snapshot of the scores of kids in grades 3-8, when you could link individual student results over time? He favors a longitudinal view and recommends a method of controlling for the unequal value of a scale score across grade levels. It’s a clever idea: simple enough to explain, sound enough to resonate with parents and educators, and solid enough to pass psychometric review.

This is a timely suggestion. The State Board of Education (SBE) is about to shelve the growth measure that ETS recommended this spring, called residual student growth, and will debate what to do instead at its July board meeting. Let’s hope the board pays attention to Paul’s advice.


The report is helpful to anyone governing or leading a district. It summarizes the differences between the CST and the CAASPP. Paul notes that the CAASPP is more “sophisticated” because it is vertically equated, meaning results can be compared across grade levels to infer growth. That was not true of the CST.

With a new test designed to make “progress” visible, he is deeply distressed to see its results misused in ways that prevent accurate estimates of year-to-year growth and, even worse, spread misunderstandings with high consequences. Here is where he believes the dashboard-based accountability system misuses CAASPP results:

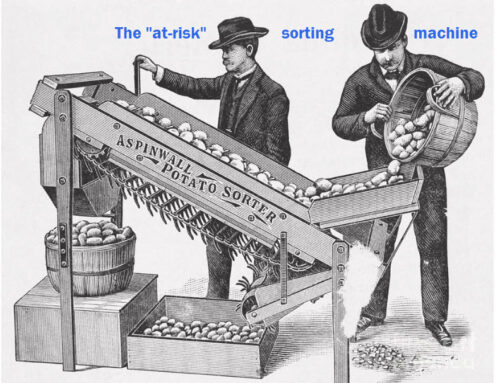

- It compares successive groups of kids in the same grade range. This is an unstable construct. Kids are not widgets. Comparing this year’s students in grades 3-8 with last year’s is equivalent to comparing kids in one grade level over time: successive snapshots rather than a record of how individual students grew. That was unavoidable in the CST era. It is neither necessary nor desirable now.

- It disregards what he calls “program migration.” Kids move into and out of categories like English learner and special education, and into and out of the free-lunch status designation. Why analyze aggregations of students whose membership keeps changing? Referring to the illogic of treating ELs as a subgroup, Paul writes: “It is extremely easy to misinterpret what the data mean.” In particular, he flags the misinterpretation of subgroup growth: “Unfortunately, our analysis shows that using CDE group data to calculate growth estimates for most subgroups of students produces inaccurate measures.”

- The CDE publishes the dashboard without cautionary notes, which leaves many readers free to reach the wrong conclusions.

The report includes a clever reconciliation of the “All Students” year-to-year change against the weighted average of each subgroup’s year-to-year change. In one trial district, San Francisco USD, here’s what Paul discovered: “The weighted average of the seven categories generated a lower All Students rate, equaling 79 percent of the All Students growth rate calculated using district totals.”
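To see how program migration alone can pull a subgroup-weighted average below the All Students change, here is a minimal sketch with five hypothetical students, every one of whom gains exactly 20 points. The data, the "EL"/"non-EL" labels, the single reclassified student, and the choice to weight each subgroup by its current-year count are all assumptions for illustration; this is not the PPIC report's calculation.

```python
from dataclasses import dataclass


@dataclass
class Student:
    """One student's subgroup label and scale score in two consecutive years (hypothetical data)."""
    group_y1: str
    score_y1: int
    group_y2: str
    score_y2: int


def mean(values):
    return sum(values) / len(values)


def all_students_change(students):
    """Change in the overall mean, as computed from district totals."""
    return mean([s.score_y2 for s in students]) - mean([s.score_y1 for s in students])


def subgroup_change(students, group):
    """Cross-sectional change: this year's members of a label vs last year's members."""
    y1 = [s.score_y1 for s in students if s.group_y1 == group]
    y2 = [s.score_y2 for s in students if s.group_y2 == group]
    return mean(y2) - mean(y1), len(y2)


def weighted_subgroup_change(students, groups):
    """Average of subgroup changes, weighted by current-year subgroup size (an assumption)."""
    total, count = 0.0, 0
    for group in groups:
        change, n = subgroup_change(students, group)
        total += change * n
        count += n
    return total / count


def matched_growth(students):
    """Longitudinal view: average of each student's own year-to-year gain."""
    return mean([s.score_y2 - s.score_y1 for s in students])


# Every student gains 20 points; the highest-scoring EL is reclassified in year 2.
students = [
    Student("EL", 2440, "EL", 2460),
    Student("EL", 2460, "EL", 2480),
    Student("EL", 2480, "non-EL", 2500),
    Student("non-EL", 2500, "non-EL", 2520),
    Student("non-EL", 2520, "non-EL", 2540),
]

print(all_students_change(students))                         # 20.0
print(weighted_subgroup_change(students, ["EL", "non-EL"]))  # 10.0
print(matched_growth(students))                              # 20.0
```

Both subgroups show only +10 because the reclassified student raises last year's EL average before leaving and lowers this year's non-EL average on arrival. The matched, student-level view recovers the true +20. In this toy case the weighted average is 50 percent of the All Students change; the report's 79 percent figure comes from real district data and different categories.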

I was surprised that the report makes only passing reference to the imprecision of test results. A standard error of about 20 scale score points is common for most students in the middle two achievement bands. According to the technical tables at the back of the 500-plus-page SBAC Technical Manual, this results in a classification error rate of about 20 percent. But this point only reinforces Paul’s conclusions. Test results are already imprecise. Misusing them compounds the imprecision, making incorrect conclusions exceedingly easy to reach.
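A minimal sketch of why a 20-point standard error matters for band placement, assuming normally distributed measurement error around a student's true score. The 80-point band and its cut scores (2500 and 2580) are hypothetical, not actual SBAC cut scores, and this toy calculation is not meant to reproduce the manual's roughly 20 percent figure, which is averaged over real score distributions.

```python
import math


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def misclassification_probability(true_score, lower_cut, upper_cut, sem):
    """Chance an observed score lands outside the band [lower_cut, upper_cut)
    that contains the true score, assuming normal measurement error with SD = sem."""
    p_inside = normal_cdf((upper_cut - true_score) / sem) - normal_cdf((lower_cut - true_score) / sem)
    return 1.0 - p_inside


# Hypothetical 80-point band, standard error of 20 scale score points.
print(round(misclassification_probability(2540, 2500, 2580, 20), 4))  # 0.0455 (band center)
print(round(misclassification_probability(2510, 2500, 2580, 20), 4))  # 0.3088 (10 points above the lower cut)
```

Students sitting near a cut score carry most of the risk, which is why an error rate averaged across many students can be sizable even when the standard error looks modest.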

After reorganizing the data into graduating-class cohorts, the report makes a number of analytic observations. These semi-stable aggregations of students can’t control for mobility into and out of a graduating-class cohort, but this quasi-longitudinal approach is the best one can do with the data in their current form. (Note: this is the method we embrace at School Wise Press’s K12 Measures Project.)
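As a rough sketch of what regrouping grade-level results into graduating-class cohorts involves, the snippet below maps each (test year, grade) pair to a class year and then follows each class from grade to grade. The on-time-progression formula, the row layout, and the scores are assumptions for illustration, not the report's or the K12 Measures Project's actual code. Like the approach described above, it cannot see students who move into or out of a class between years.

```python
from collections import defaultdict


def class_of(test_year, grade):
    """Graduating class for students tested in spring of test_year in the given grade.
    Assumes on-time progression: a grade-12 student tested in spring 2017 is the class of 2017."""
    return test_year + (12 - grade)


def build_cohorts(rows):
    """Regroup (test_year, grade, mean_score) rows into one series per graduating class."""
    cohorts = defaultdict(list)
    for test_year, grade, mean_score in rows:
        cohorts[class_of(test_year, grade)].append((test_year, grade, mean_score))
    for series in cohorts.values():
        series.sort()  # order each class's results by test year
    return dict(cohorts)


def cohort_changes(series):
    """Year-to-year change in mean scale score along one class's series."""
    return [later[2] - earlier[2] for earlier, later in zip(series, series[1:])]


# Hypothetical district mean scale scores, not real CAASPP results.
rows = [
    (2015, 3, 2410), (2015, 4, 2450),
    (2016, 4, 2455), (2016, 5, 2490),
    (2017, 5, 2500), (2017, 6, 2515),
]

cohorts = build_cohorts(rows)
print(cohorts[2024])                  # [(2015, 3, 2410), (2016, 4, 2455), (2017, 5, 2500)]
print(cohort_changes(cohorts[2024]))  # [45, 45]
```

Comparing across grades this way leans on the vertical equating of the CAASPP scale mentioned earlier; the same regrouping applied to CST results would not yield meaningful growth figures.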

Those observations are fascinating. The most interesting was the discovery that, at the district level, there is little variation in score levels among students receiving lunch subsidies but substantial variation among those not receiving them. His second finding: only 45 percent of districts saw gains between the 2016 and 2017 test cycles, whereas in the prior cycle, 2015 to 2016, more than 80 percent did. Many have noted this sag, including Doug McRae, who observed a pattern of sagging scores across all SBAC consortium states.

Paul Warren’s work is a serious warning flag. If dashboards are providing false signals, then his advice to slow down and reexamine their statistical foundations is well warranted.