I spent September 13 at a special event in Palo Alto to discuss the potential for evidence generated when students use online instructional materials. About 30 people gathered from around the country, and they shared deep experience and concern with building better evidence, enabling applied research to improve K12 education. The star of the day was Mark Schneider, the newly appointed chief of the Institute of Education Sciences. His impatience with high-cost, low-reward research was palpable. His passion for practical applications of sound research was notable. But the brighter moments for me were the opportunities for dialogue.

One question that percolated up through my table’s discussion of the barriers to the use of research by educators was prompted by Andy Rotherham, co-founder of Bellwether Education Partners. Andy observed how prone to fads the education world has become. All too often, education policy makers and practitioners flit from one remedy to another, hoping to solve a problem whose diagnosis shifts with the seasons. These leaders have little reason to turn toward research when the next cure-all for whatever ails education beckons. Following fads is simpler than digging up research, and is often rewarded with praise from peers.

This led me to share an observation of my own about a fad I’ve long been suspicious of: “best practices.” In 20 years of serving California district leaders, I’ve noticed that “best practices” is almost always embraced as a method for finding solutions to local challenges. Why does anyone think education challenges are so simple that all it takes is knowing the best treatment for the ailment?

Yet, the appeal of simplicity exerts a powerful gravitational force. Complexity repels most people, especially those busy managing schooling. “Best practices” can’t be hard to find. Troubled by your district’s difficulty reclassifying students you think are English learners? Just Google “best practices for teaching English learners to read and write English” and “voila!” If there are best practices, you should find them there.

Well, if you think that research is too hard to find, harder to understand, and nearly impossible to apply in practice, then what’s wrong with turning toward “best practices”? Just four things.

- Life is messy. And the education of young human beings is complicated. You can’t distill the improvement process into a cookbook. You can’t turn to a list of best ingredients, and then combine them in the best way, and think you’re going to get the best results. Students aren’t baked goods. Teachers aren’t pastry chefs. And instructional methods aren’t like whisking egg whites.

- What’s “best” for one school’s concerns is not likely to be “best” for another. Any improvement process requires a firm definition of the problem. That means evidence of high quality, highly localized to your circumstances, applied with high fidelity to a smart implementation plan. Failure can occur at any point in that chain of four elements. If you don’t believe me, read “Learning to Improve: How America’s Schools Can Get Better at Getting Better” by Bryk, Gomez, Grunow, and LeMahieu.

- Worst practices are visible all over the K-12 landscape, some of them even baked into policy. A “worst practice” in K-12 is the equivalent of a nurse not washing his or her hands after changing a patient’s bandages covering open sores. It’s obvious that the next patient that nurse visits is at risk of an infection. A “worst practice” in K-12 is using a test like CELDT to confirm that a kindergartner or first-grader is an English learner, when the test has a 30 percent error rate, according to Stokes-Guinan and Goldberg (2011). A second study I read offered harsher criticism, claiming that when the CELDT was given to a large panel of young students who grew up in families where only English was spoken, three out of four of those students failed the CELDT. I hope you are as shocked as I was when I first read the research.

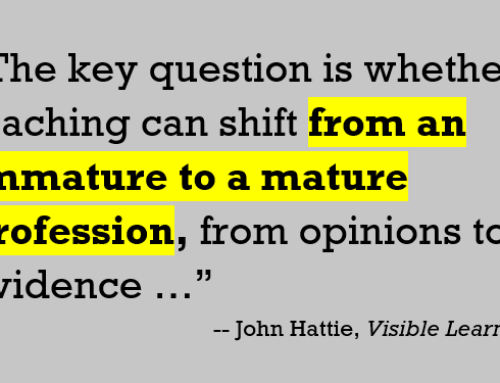

- Too many self-proclaimed “best practices” can’t be confirmed with evidence. I’m not a pointy-headed research snob who believes high-quality evidence only results from large-scale, randomized controlled trials. But I am an evidence guy. I’m talking about the absence of evidence to confirm a “best practice.” And I’m talking about faulty logic applied to reasonable evidence. Both result in wrong inferences. Scratch the surface of the original research that justified the claim of many “best practices,” and too often you’ll find small samples, shoddy methods, and low effect sizes.

So back to this one-day conference. The suspicion I voiced about “best practices” was affirmed by everyone at the table. And one participant at our table chimed in that she was part of a research team that was studying the fallacies of “best practices” on an international scale. How pleasing to discover I was not the only one who was skeptical.

So if you’re involved in a community of practice, and are striving to bring improvement science methods to bear on your shared problem of practice, brace yourself. You’ll need to fine-tune your b.s. detectors, and be alert for the quick fix, the shake-and-bake solution of the day. Get ready to wrestle with monkey demons, wrangle with gnarly data, and question evidence like dashboards that promise simple answers and pretty colors.

What can you do in the meantime that’s constructive? Enlist education outsiders to help you identify suspected worst practices, like those that waste time and money. And then get your district to stop doing them. The next time someone advances a “best practice,” I suggest you ask them, “Where’s your evidence? What is the quality of your evidence? And how do you know your inference is correct?”