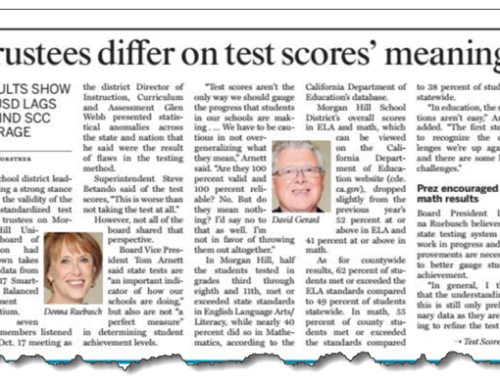

Every time I hear the term “at-risk,” I wince. It’s usually an attempt by educators to avoid saying that students whose parents aren’t well-off or college-educated, or who speak Spanish at home, are likely to score lower on tests or drop out. But recently I did more than wince when I read this article in the NY Times (10/11/24), “Nevada Evaluates At-Risk Students Using AI.” The article revealed that Nevada’s education leaders were using artificial intelligence methods to identify students who may be “at-risk.” Their concern was that in 2022, about 270,000 students had already been identified by the state as “at-risk.” The sole criterion: household income. All students who qualified for subsidized meals were considered to be “at-risk” of some vague school-related trouble.

Of course, this was expensive, since extra funds flow to schools for each of the students tagged with that label. In an effort to bring that number down to the range of affordability, state education leaders used 75 data elements about students to get the number down to a more manageable 65,000. This attempted improvement was wrong in two ways. First, it uses far too many data elements. Stat-heads will expect low marginal gain in precision after using the most powerful three or four elements. Second, it’s wrong because their predictive model throws into the mix a student’s ethnicity and subsidized meal eligibility.

There are ways to do this right. You could analyze each individual student’s behavior: days in school, unexcused absences, suspensions. You could analyze each individual student’s grades and test results. These are bits of evidence that are student-specific, and vary considerably, person-by-person.

Educators automate flawed reasoning to determine who’s “at-risk”

But there are also ways to do it wrong. You could pay more attention to accidents of birth: ethnicity, parents’ education. You could pay more attention to accidents of circumstance like household income or country-of-origin. These are among the 75 elements in Nevada’s predictive algorithm. What weight do Nevada higher-ups assign to these factors? They have not revealed it. They are protecting it, as if it were a prized family recipe.

Relying on these group characteristics to predict individual behavior also means relying on a logical fallacy: that the average attributes of a group accrue to each of its members. This fallacy, termed the “ecological inference fallacy,” is also one of the elements of ethnic stereotyping (e.g., “Of course Riley drinks too much. He’s Irish, you know,” ad nauseam).
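For readers who like to see the fallacy in numbers, here is a minimal sketch in Python. Everything in it is invented for illustration: the two groups, the absence counts, the cutoff of 6 and the function names are my assumptions, not Nevada’s data or Nevada’s model. It flags the same eight imaginary students twice, once by their group’s average and once by their own record, and counts how often the two methods disagree.

```python
from statistics import mean

# Hypothetical, made-up records for illustration only:
# (group label, unexcused absences for one student).
STUDENTS = [
    ("A", 1), ("A", 2), ("A", 1), ("A", 12),
    ("B", 3), ("B", 9), ("B", 10), ("B", 10),
]

# Hypothetical cutoff: more than 6 absences counts as "at-risk" here.
THRESHOLD = 6


def group_means(records):
    """Average absences per group."""
    by_group = {}
    for group, absences in records:
        by_group.setdefault(group, []).append(absences)
    return {group: mean(values) for group, values in by_group.items()}


def flag_by_group(records, threshold):
    """Flag every student whose GROUP average exceeds the threshold."""
    means = group_means(records)
    return [means[group] > threshold for group, _ in records]


def flag_by_individual(records, threshold):
    """Flag every student whose OWN absences exceed the threshold."""
    return [absences > threshold for _, absences in records]


group_flags = flag_by_group(STUDENTS, THRESHOLD)
individual_flags = flag_by_individual(STUDENTS, THRESHOLD)

# Students the group-average method gets wrong, judged by their own record.
mismatches = [
    student
    for student, g, i in zip(STUDENTS, group_flags, individual_flags)
    if g != i
]
print(mismatches)
```

In this toy data, group A averages 4 absences and group B averages 8. Sorting by group average clears the group A student with 12 absences and flags the group B student with only 3. Both errors come from treating the average as if it described each member.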

What’s most troubling is that the auto insurance field figured out how to avoid this fallacy by individualizing insurance rates 16 years ago. The old approach was to assign a prospective customer a risk score based on their zip code. Why zip code? Because the analysts and executives of insurance companies believed that people who lived near each other were more or less equally likely to get behind the wheel of a car and cause an accident. (An example of what some statisticians call the tyranny of the average.)

Auto insurers use technology more wisely to individualize rates for drivers

But in 2008, a firm called Progressive Insurance broke from this tradition and launched an individualized approach to the pricing of policies. They believed that your actual driving behavior was a better indicator than zip code of your likelihood of getting in an accident. Individual behavior drove their pricing, not zip code. They called it MyRate, and later rebranded it as Snapshot. No surprise, customers showed their appreciation for this sensible form of fairness by buying Progressive’s policies.

Why can’t education administrative leadership learn from other fields how to apply computer technology appropriately? Isn’t it wiser to estimate the risk of a student dropping out by their individual behavior and their grades, than by inferring their behavior based on the color of their skin or the household income their parents earn? Simply automating a sorting process based on the fallacy that all individuals in a group embody the average attributes of that group is just speeding up a stupid decision-making machine.

This is not a moment for Inspector Gadget-inspired, artificial intelligence tricks. This is a moment for human intelligence: clear-headedness, logical rigor and an enlightened view of how and when to extend the power of our minds with computer software.