Times are strange. Scholars in this post-Enlightenment era are supposed to favor reason and champion scientific inquiry. Evidence is the raw material for science. One would hope that reasoned arguments also rest on evidence, and evidence is what tests produce. They make student learning visible. So why would any scholar oppose tests, particularly the interim assessments designed to measure the progress of student learning?

I can only invite scholar Heather Hill (Harvard Graduate School of Education) to explain herself. In a February 7, 2020, Education Week essay, she asserted that interim testing “has almost no evidence of effectiveness in raising student test scores.” She recently reaffirmed this odd belief in a February 2022 presentation at the Research Partnership for Professional Learning. Reporter Jill Barshay of the Hechinger Report quoted her as stating, “Studying student data seems to not at all improve student outcomes in most of the evaluations I’ve seen.”

This is a straw-man argument. Interim assessments aren’t magical. Tests don’t improve student learning. What teachers do with the feedback tests provide about student learning is what matters.

Here’s her line of argument. First, she hypothesizes that students who take interim assessments should show higher gains on state assessments than students who don’t. Then she reviews 23 research studies conducted over the last 20 years. She finds three of the 23 with a large enough number of students to reduce the risk of random error to less than 5 percent. Two of those three indicated slightly positive results (slightly higher rates of growth for students who took interim tests). Despite this evidence of small but positive effects, she concludes the opposite. Considering all 23 studies, she finds that “… on average, the practice seems not to improve student performance.”

But what practice is she really referring to? Later in her article, she clarifies the practice that she believes has no benefit to students.

“Administrators may still benefit from analyzing student assessment results to know where to strengthen the curriculum or to provide teacher professional learning. But the fact remains that having teachers themselves examine test-score data has yet to be proven productive, even after many trials of such programs.” [emphasis my own]

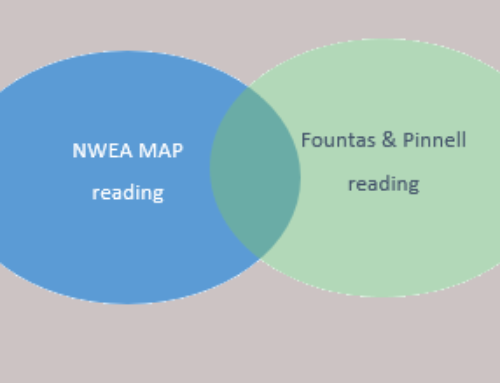

It turns out that Ms. Hill’s objection isn’t to interim testing per se. She objects to teachers investing time trying to interpret results! Her view is that at best, this is wasted time. This is an entirely different conclusion than saying the tests have no benefit. It isn’t the tests themselves that have a null effect. It is what teachers do (and don’t do) with them.

Heather Hill is not alone. Reporter Jill Barshay quotes Susan Brookhart, professor emerita at Duquesne University and associate editor of the journal Applied Measurement in Education, as having reached the same conclusion: “Research does not show that using interim assessments improves student learning. The few studies of interim testing programs that do exist show no effects or occasional small effects.” Brookhart and co-author Charles DePascale wrote up their findings in a chapter for the forthcoming edition of the book Educational Measurement.

When I look at the findings of these scholars, I reach a different conclusion. If teachers’ efforts to interpret evidence of student learning aren’t resulting in higher test scores, perhaps the evidence is being mismanaged, misinterpreted, and misused. What Ms. Hill gleans from these 23 studies about the quality of teachers’ analytical skill is well warranted. I’ve seen administrators reach mistaken conclusions about the meaning of test scores more often than I’ve seen them reach reasonable ones. The problem is pervasive. So on this count, I agree with Hill, Brookhart, and DePascale.

Why is the interpretive responsibility on teachers’ shoulders? Why don’t districts expect their assessment leaders to be the first-line interpreters of results? Analytic judgment is a rare skill. It doesn’t come from taking a mixed-methods course in a master’s program. (See this blog post of mine, which makes the case for putting analysis responsibility primarily in the hands of assessment directors.)

Author and scholar Jenny Rankin has a scarier view. In her 2013 study of over 200 California teachers, she found that even under the best of circumstances, nearly half of them interpreted state assessment results incorrectly! Under normal circumstances, teachers’ interpretive error rate was even higher. (This blog post explains her findings.) Would you want to be the patient of a doctor whose diagnostic accuracy was 52 percent on a good day?

Let’s pause and look at the evidence. If students who take interim tests show little benefit, and if teachers often misinterpret results even after discussing them with colleagues, this shouldn’t lead to test-bashing. It should lead to a review of who’s in charge of test quality and interpretation.

Heather Hill points out that teachers continue to seek guidance about how to turn test evidence into new instructional approaches. Perhaps that’s where leaders need to invest more heavily. And perhaps they’d do well to redefine their assessment directors’ job descriptions to emphasize sound interpretive judgment and deemphasize test logistics.