For more than 20 years, higher-ups have been urging educators to “just use data” when making decisions. But almost no one has dared to study what happens when teachers do what they’re told and use state assessment data to make decisions about their students.

Jenny Rankin, whose research revealed how often teachers draw the wrong conclusion from test data when left to their own devices.

Jenny Rankin is one of the few who dared to ask this question. She discovered that teachers, when left to themselves, misinterpreted student test results nearly half the time. OK. I exaggerated. Their error rate was 48 percent. That’s the bad news.

She uncovered this shocking fact during her dissertation research. Her hunch that teachers were ill-prepared to reach sound conclusions was well informed: she had worked for years as an assessment lead in Saddleback Valley USD, and because she worked with teachers and principals at the site level, she saw how often these errors occurred. By the time she decided to study the problem in earnest in 2011, she had also helped create Illuminate-Ed, which gave her a rare opportunity to see how thousands of teachers used their assessment management systems.

The good news: when teachers were given user guides, more descriptive column headers and footnotes, and an appendix for reference, their error rate dropped to about 15 percent. That’s at the upper end of the range at which hospital doctors misdiagnose their patients’ ailments (8 to 15 percent).

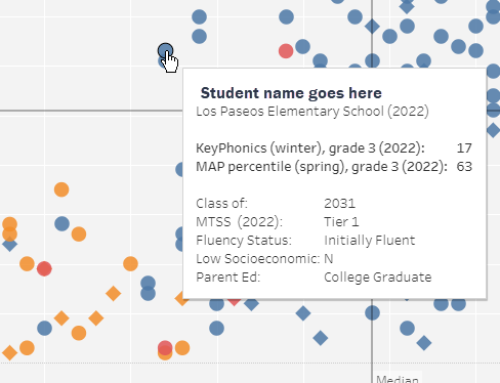

Jenny didn’t cut corners with this study. She recruited over 200 K-12 educators (the vast majority of them teachers), enough to be confident that the results weren’t due to chance. She used the report displays that most California teachers relied on to interpret student, class and grade-level results on the California Standards Test and California Basic Educational Skills Test. And she designed a series of questions that were fair, clear and unbiased. Yes, I reviewed the questionnaire. (In the interest of full disclosure: Jenny Rankin is a member of our PD delivery team, and a friend.)

Her findings encouraged Jenny to codify standards for quality reporting, applied specifically to educational assessment, in a book: *Standards for Reporting Data to Educators: What Educational Leaders Should Know and Demand*, published by Routledge/Taylor & Francis in 2016. She knows what works, and how much these reporting standards improve the judgment of the people who interpret the reports. The same year, her publisher brought out a companion guide, *Designing Data Reports That Work*.

Here’s what makes her discoveries so important today: her findings remain unheeded. Why are assessment reports still so poorly presented by those who make them? Why are psychometric principles, such as the uncertainty in every test result, so often ignored? Why aren’t the institutional buyers of these reports demanding better-quality reporting? And why aren’t districts and state departments of education providing help desks staffed with pros who can answer questions about what students’ test results mean?

Schools of education and professional associations also share the blame for today’s abysmal state of assessment literacy. Why aren’t assessment literacy courses required for those seeking teaching credentials? Why aren’t courses in analytic reasoning, planning and quantitative methods required for those seeking administrative credentials? Why aren’t professional associations such as ACSA and CERA urging their members to become savvy about assessment and measurement?

I have a private hunch about all this: I think the education management profession is quietly relieved when errors in professional judgment can’t be seen. In fact, the word “error” rarely appears in education management journals, magazines or association publications. Although errors are an accepted part of other disciplines, they are apparently banned from education. In professions such as medicine, errors are studied as learning opportunities and written up as case studies. If K-12 leaders don’t recognize errors, they certainly deny themselves the opportunity to learn from them.

If school boards told district leaders to stop pursuing “best practices” and start pursuing error reduction, I bet errors would start getting noticed. Errors like misdiagnosing students (e.g., placing a student in special ed when the real problem is reading); miscategorizing students (e.g., flagging a student for a Tier 2 reading intervention when the child actually has a vision impairment); wasting instructional time; purchasing a weak instructional program; failing to detect which elementary teachers are unqualified to teach math; choosing not to screen kindergartners and first-graders for dyslexia … The list is a long one.

Jenny Rankin has opened a door to a conversation. Who’s ready to walk through that door, sit down at her table, and join in?