Napa CoE is taking new steps to help its districts build better LCAPs

Barbara Nemko, Napa CoE’s superintendent, wants districts’ plans to get smarter, and she’s ready to shoulder more responsibility for making that happen. Already she's invested in better evidence for planning, and in analytic support and coaching for her LCAP chief. And she's welcomed district leaders [...]

Why writing an LCAP in this pandemic is an opportunity to transcend the status quo

I have a hunch that you may not be looking forward to starting your district’s new three-year LCAP. You’re up to your eyeballs with short-term planning for getting instruction delivered. Who’s teaching remotely? Who’s teaching in classrooms? How is remote assessment working? Who’s the best teacher in our elementary team at delivering instruction via Zoom? It makes three-year planning seem [...]

Let’s stop trying to turn teachers into analysts

In ten years, will historians look back at the 30-year effort to get teachers to interpret test data as a failure? I’m impatient. I don’t want to wait ten years. Although I’m no historian, here’s my verdict. Yes, it was a failure. This long push to make teachers do “data-driven decision-making” has flopped. But of all those who share responsibility [...]

Who’s gathering diagnostic evidence when schools open?

This is a story of connecting the dots, of diagnostic riddles. This is a story about the value of practicing science rather than teaching science. Yogi Berra, Florence Nightingale, and a doctor who cares for the homeless have some guidance for you. Dr. Jim O’Connell, a doctor working at homeless shelters in Boston, noticed an odd pattern. By the [...]

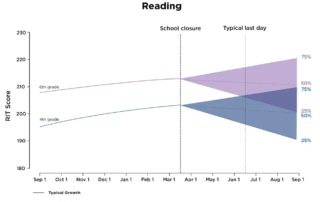

Prepare for surprises when you assess students in the fall

I am weary from reading predictions of pandemic-related learning loss. They all share two assumptions I question: (1) that missing days of school must mean losing knowledge and skills, and (2) that when students eventually take interim assessments in the fall, they will all show declines relative to their prior score. Many of those asserting this learning loss cite no [...]

Measuring gaps the right way: Stanford’s Educational Opportunity Project

Although the California Dept. of Education’s Dashboard continues to mismeasure gaps, a team of social scientists at Stanford is interpreting gaps wisely. Meet Sean Reardon and his talented colleagues at Stanford’s Center for Education Policy Analysis, the Stanford Education Data Archive, and the Educational Opportunity Project. I’m not simply applauding the quality and quantity of research their team is producing [...]

Gap measures in the Dashboard are wrong

As education leaders declare their support for equal justice, and repeat their commitment to reduce the achievement gap, they are hard-pressed to answer the most direct questions about that gap in their own districts. “How big is the math achievement gap, based on students’ ethnicity?” “Does it grow larger or smaller as students move from elementary through middle school?” I [...]

Three dimensions of parent and student engagement well suited to the COVID-19 era

When school opens in the fall, districts will face a moment of reckoning. They will learn how much confidence their parents and students have in the district's ability to minimize the risk of COVID-19 infection and maximize learning opportunities. It's a measure of parent engagement districts will take seriously. Funding will hang in the balance. [...]

The root of “accountability” is counting

What do the CDE and CDC have in common? They share a problem counting people correctly. The counting problem at the Centers for Disease Control (CDC) began when the White House pressed the agency to boost its testing numbers. So a deferential functionary at the CDC had the not-so-bright idea of merging two utterly different tests: the swab [...]

Norms, test results and Lake Wobegon: a cautionary tale

As you are putting finishing touches on your district’s LCAP or your site’s SPSA, I hope you're valuing your interim assessments more highly. With this April's CAASPP/SBAC testing cycle scrubbed, that’s all you’ll have to work with next year when drawing conclusions about student learning. Making sense of those results at the school or district level requires the same knowledge [...]

Free of the CAASPP/SBAC, and free to create new evidence of student learning

The appearance of the COVID-19 virus has led to the sudden disappearance of the CAASPP/SBAC tests here in California. Other states have also lost their spring standardized tests. Is this a moment to dread the loss of one estimate of students' academic progress? Or is this a moment to welcome the use of other measures to estimate students’ level of [...]

From virus infection rates to students’ test scores … How interested in the numbers are we?

Now that I’m stuck indoors, I’m reading more, especially news stories that attempt to squeeze meaning from numbers about infection rates, projected deaths, hospital beds, respirators … Yes, it’s gruesome, but I am curious to learn how everyone is trying to make sense of the data. Most everyone is wondering: “What do the numbers mean, and what should I do [...]

The Legislative Analyst’s Office’s Smart Ideas About LCAPs

Not far from the Capitol Building where legislators make laws, and not far from the CDE’s tower where higher-ups make policy, sits the Legislative Analyst’s Office (LAO). That’s where more than 50 smart and rational souls critique sloppy bills and clumsy policies, with only one concern in mind: whether those bills and policies are best for Californians. They look at [...]

Would you use flawed evidence as the foundation of your school and district plans?

Supt. Fred Navarro at Newport-Mesa USD has taken a stand on the question of the quality of evidence. He has told his planning teams to use no bad evidence. That includes demoting the Dashboard, as needed. But why is this prudent and reasonable step noteworthy? It's unfortunately noteworthy because the CDE has built a Dashboard that produces flawed conclusions from [...]

Let’s get real about principals’ school site plans

I’ve recently returned to the side of principals, helping them build site plans that mean something. This is the third year our K12 Measures team has provided support to planners, and I’m moved to say out loud what I’ve kept too quiet in prior years. It is not fair or reasonable to call this "planning." Principals are not given full [...]

When Teachers Are Left Alone to Make Sense of Test Results, Half Get It Wrong

For more than 20 years, higher-ups have been urging educators to “just use data” when making decisions. But almost no one has dared study what happens when teachers do what they’re told, and use state assessment data to make decisions about their students. Jenny Rankin, whose research revealed how often teachers draw the wrong conclusion from test data when [...]

Dashboard flaws: why all those colors instead of numbers?

I’m feeling very blue today. I vote green. And I stop for red lights. When I tell you what I do or how I feel using a language of colors, I communicate okay. But when I’m counting things, I use the language of numbers. How full is my gas tank today? Three-quarters full. How many days until my daughter’s birthday? [...]

Another CERA conference without debate, dialogue or disagreement

Each November, I go to the annual conference of the California Education Research Association hoping that it will take a stronger leadership role, more independent of the CDE, and more ahead of its members. But again this year, my hopes for the organization were not met. And this was a year when leadership was sorely needed. In a moment when [...]

A Great Plan Wins High Praise: Morgan Hill’s LCAP Wins CSBA’s Golden Bell Award

Planning doesn't excite many K-12 leaders. But in the south end of Santa Clara County sits Glen Webb, who is CIA director (curriculum, instruction and assessment) for Morgan Hill USD. He gets excited by planning, and his years as a science teacher equipped him to plan like a scientist. This year, his plan won kudos from the Santa Clara County [...]

The point of visualizing data is discovering human stories

I just returned from the annual conference of the California Education Research Association, feeling both pleased and disturbed. What pleased me was the conference theme itself: the power of visualizing data. That’s an entirely suitable topic for the members of an organization in charge of communicating the meaning of quantitative measures. What disturbed me was that the presenters I heard [...]